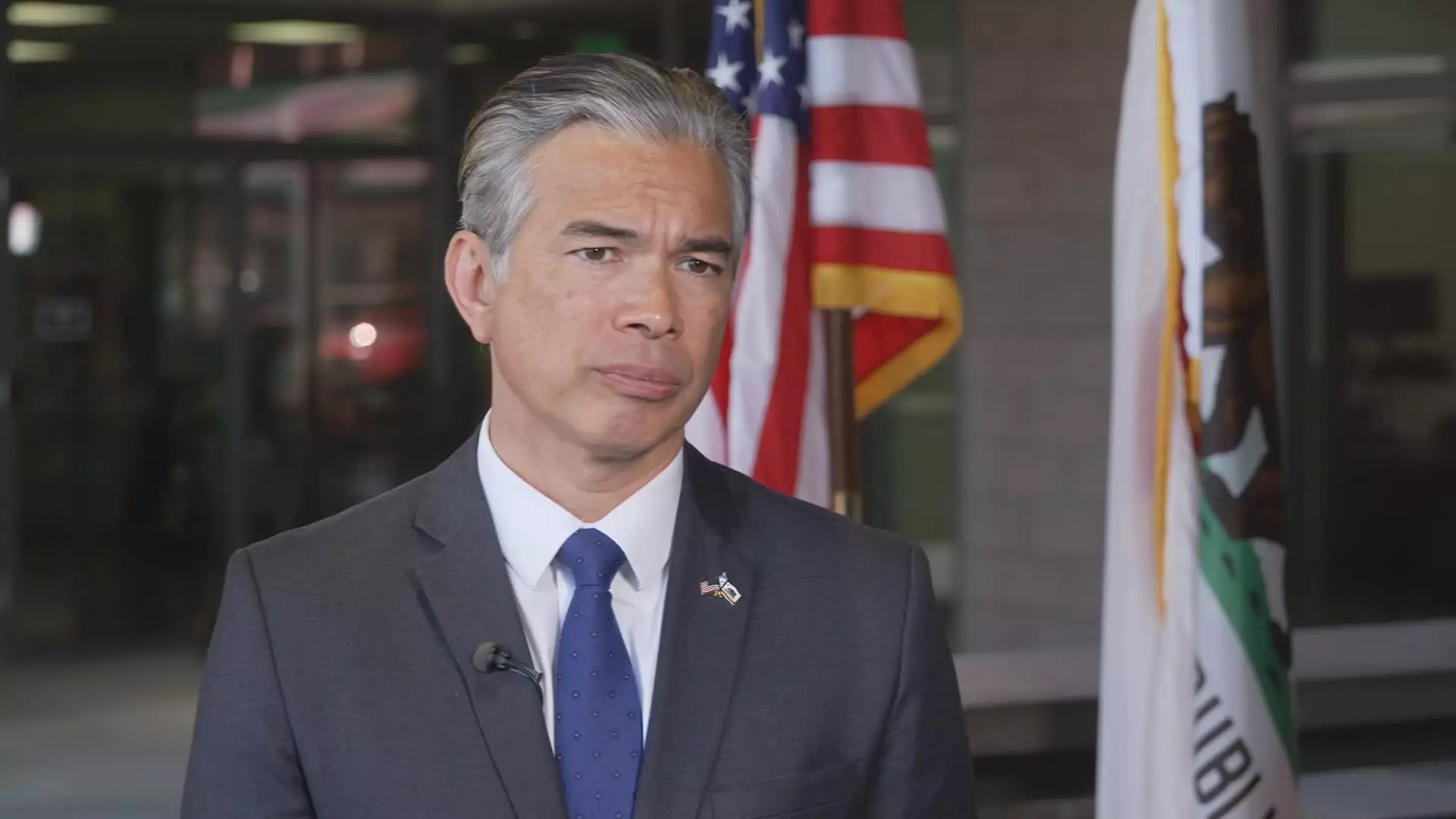

As the November elections draw closer, California Attorney General Rob Bonta has called on big tech companies to step up their efforts to protect voters from misleading information. Social media and artificial intelligence have become a crucial source of news and information about upcoming elections for millions of Californians. With this influence comes a significant responsibility; Bonta emphasizes that these platforms must not become tools for deception or intimidation, which threaten to undermine democratic practices.

Bonta’s message is directed at key industry leaders, including those from Alphabet, Meta, Microsoft, OpenAI, Reddit, TikTok, X (formerly Twitter), and YouTube. His letter outlines the potential legal ramifications of using these platforms to spread misinformation. California law prohibits actions that interfere with a citizen’s voting rights, ranging from misleading information about polling places to intimidation tactics designed to deter participation. By reminding these executives of the laws that govern the state’s electoral processes, Bonta aims to instill a more conscientious approach among tech giants.

As the digital age continues to advance, the emergence of artificial intelligence has opened new avenues for information dissemination, both good and bad. In the weeks leading up to the elections, AI-generated misinformation has surfaced prominently in political discourse. Celebrities like Taylor Swift have found themselves unwillingly embroiled in fabricated narratives, such as false endorsements produced with AI tools. Swift’s recent rejection of unfounded claims linking her to Donald Trump shows how AI can distort reality and further polarize political views.

Influential figures have misused AI tools as well: Elon Musk circulated an AI-generated image portraying Vice President Kamala Harris in a compromising light, which underscores the urgency of Bonta’s concerns. Such images can create harmful stereotypes or malign individuals, calling into question the ethical boundaries within which tech platforms operate. The evolution of tools like Google’s Gemini and OpenAI’s DALL-E creates a pressing need for regulatory frameworks that keep such image generation within responsible limits, especially during election cycles.

California’s election law is robust, with specific statutes aimed at curtailing political deception that directly targets candidates. Bonta’s letter clarifies that disseminating misleading media about candidates within a stipulated timeframe before an election is not only unethical but illegal, a regrettably underreported consequence of AI misuse. Hence, the onus now lies heavily on tech companies to self-regulate and develop stringent protocols that prevent the spread of fake news that could skew voter perceptions or electoral outcomes.

Ultimately, as voters increasingly turn to social media for electoral information, the role of technology companies in the fight against misinformation becomes paramount. Their commitment to protecting voter rights and ensuring a fair electoral process could shape public trust in both technology and governance in a digitally dominated future. The dialogue initiated by Bonta serves as a call for vigilance and responsibility, urging tech leaders to prioritize democracy over profit and political agendas. As we approach the elections, one cannot overlook the critical interplay between technology, law, and the fundamental rights of citizens.