DeepSeek, a Chinese AI startup, has made significant strides in the world of artificial intelligence, particularly within the realm of open-source technologies. Originating from the quantitative hedge fund High-Flyer Capital Management, DeepSeek has set its sights on bridging the gap between open-source and closed proprietary AI models. The release of their newly developed ultra-large model, DeepSeek-V3, represents a strategic effort to compete against industry giants and promote innovation within the AI ecosystem. With this latest model boasting an impressive 671 billion parameters, DeepSeek continues to challenge the existing norms, signaling a shift in the landscape of AI development.

DeepSeek-V3 is characterized by its sophisticated mixture-of-experts (MoE) architecture, which optimizes performance by activating only a selected subset of parameters when processing tasks. This approach is particularly beneficial for efficiency, as it minimizes computational load while still delivering high-quality outputs. Notably, out of its 671 billion parameters, the model only utilizes 37 billion for each task, a strategy that ensures its adaptability and resource management.

Compared to its predecessor, DeepSeek-V2, the new model not only retains the core multi-head latent attention architecture but also introduces novel enhancements to improve performance. Among these advancements is the auxiliary loss-free load-balancing strategy, which dynamically distributes workloads among experts, maintaining balance and efficiency without sacrificing overall model performance. Additionally, DeepSeek-V3 incorporates a multi-token prediction (MTP) feature, allowing it to generate multiple tokens simultaneously, resulting in an impressive output rate of 60 tokens per second.

The development of DeepSeek-V3 required extensive training on a remarkable dataset containing 14.8 trillion diverse and high-quality tokens. The training process involved a two-stage context length extension, first expanding the maximum context length from 32,000 to 128,000 tokens. This significant enhancement enables the model to grasp broader contexts, facilitating its prowess in understanding nuanced prompts and generating comprehensive responses.

Furthermore, the post-training phase integrated supervised fine-tuning and reinforcement learning techniques to align the model’s behavior with human preferences. This meticulous process is crucial for optimizing the model’s reasoning capabilities and ensuring that it meets the expectations of various users.

A significant highlight of DeepSeek-V3’s development lies in the cost-effectiveness of its training process. By employing advanced hardware and algorithmic optimizations, such as FP8 mixed precision training and the DualPipe algorithm for parallelism, DeepSeek managed to complete the full training of DeepSeek-V3 in approximately 2,788K H800 GPU hours, costing around $5.57 million. This figure is starkly lower than the hundreds of millions typically associated with training large language models, such as the estimated expenditure of over $500 million for Meta’s Llama-3.1.

DeepSeek’s focus on reducing training costs without compromising performance demonstrates their commitment to making cutting-edge AI technology more accessible to a broader range of enterprises. It sets a new precedent for how AI models can be developed sustainably.

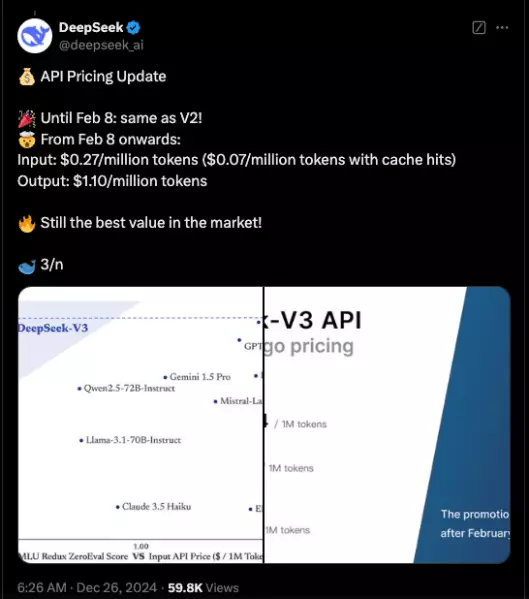

In performance comparisons, DeepSeek-V3 has exhibited remarkable results, even outperforming several leading open-source and closed-source models. According to benchmarks, it has surpassed Meta’s Llama-3.1 and matches up closely with industry stalwarts like OpenAI and Anthropic. Particularly impressive is its performance on tasks centering on Chinese language processing and mathematical problem-solving, where it achieved an outstanding score of 90.2 on the Math-500 test.

Despite its advantages, DeepSeek-V3 is not without competition. It faced challenges from Anthropic’s Claude 3.5 Sonnet on certain benchmarks, underscoring the intense rivalry in the AI market. However, the advancements demonstrated by DeepSeek-V3 signal a significant leap forward in equating the capabilities of open-source models with their closed-source counterparts.

The introduction of DeepSeek-V3 is a watershed moment for open-source AI development. By providing enterprises with access to a high-performing model that challenges proprietary solutions, DeepSeek fosters an environment of innovation and competition that benefits the wider industry. With their commitment to affordability, efficiency, and performance, DeepSeek is not only a player in the AI landscape but a catalyst for change, promoting a future where access to powerful AI tools is democratized. As companies look to harness AI for various applications, DeepSeek’s contributions will undoubtedly shape the trajectory of artificial intelligence development in the coming years.