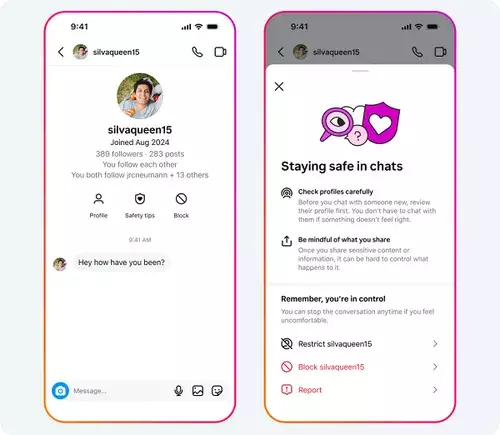

In an era in which social media dominates the lives of young people, safety has become a paramount concern. Meta’s latest initiatives represent a strategic move towards more protective online environments for teenagers. These measures are not merely reactive; they signal an intention to embed safety as a core feature of the social experience. By introducing user-friendly tools like “Safety Tips” directly within Instagram chats, Meta fosters awareness without disrupting the social flow. Such features serve as real-time educational prompts, helping teens recognize scams or predatory behavior promptly. This approach not only empowers the user but also subtly shifts responsibility onto the platform, which acts as a guardian rather than a passive conduit for social engagement.

Meta’s simplified blocking and reporting functions further demonstrate a commitment to reducing friction in safeguarding young users. The combined block-report option condenses what was once a multi-step process into a single, seamless action. Such streamlining helps ensure that potentially harmful accounts are swiftly flagged and reviewed, minimizing their disruptive impact. This consolidation indicates Meta’s understanding that ease of use is crucial in encouraging young users to actually use these tools rather than ignore them. These incremental changes reflect a larger philosophy: making safety mechanisms intuitive enough that they become second nature for digitally savvy teens.

Addressing the Darker Realities of Teen Engagement

Despite these advancements, the platform’s ongoing battle with malicious actors, particularly those attempting to exploit minors, remains unresolved. Meta’s acknowledgement that it has removed hundreds of thousands of accounts involved in predatory behavior highlights the persistent threat that these platforms face. Sexualized comments, solicitation, and manipulation attempts are not occasional nuisances but systemic issues that require relentless vigilance. While Meta’s increased transparency about account removals provides some reassurance, it also underscores the scale of the problem. Harmful actors are constantly evolving their tactics, and social media giants such as Meta must continuously refine their detection and prevention systems.

The company’s additional protections, including limiting who can message minors and hiding location data, are steps in the right direction, but they are ultimately reactive. They depend on the platform’s capacity to identify and eliminate offending accounts quickly, and the sheer volume of activity and the sophistication of abusers make that a significant challenge. Digital safety cannot be a one-size-fits-all solution. Meta’s efforts should be complemented by broader societal initiatives and digital literacy programs that prepare teens for the dangers they face online. The platform’s measures, although significant, should be viewed as part of a larger ecosystem of child safety, not as a sole remedy.

Balancing Safety with Autonomy and Development

While Meta’s enhancements are commendable, they also raise questions about autonomy versus oversight. The increased protections for teen accounts, especially adult-managed profiles, swing the pendulum towards heightened supervision. On one hand, this reduces exposure to grooming and exploitation; on the other, it could diminish teens’ sense of independence and control over their social space. Overprotectiveness might inadvertently create a feeling of mistrust or paternalism, which would undermine efforts to encourage respectful, responsible online behavior.

Moreover, Meta’s support for raising the minimum age for social media access in the EU reveals an awareness of the delicate balance needed. It recognizes that restricting access based on age can serve as a protective barrier, but it also enters a gray area of enforcement and individual rights. While protecting minors from harmful influences is vital, there is a risk of creating a divide where younger teens feel excluded or less trusting of social platforms. The challenge lies in designing safety features that empower teens without making them feel surveilled or infantilized. Achieving this balance requires a nuanced understanding of adolescent development, digital literacy, and the social needs of teenagers.

The safeguards that Meta has assembled reflect an acknowledgment of its responsibility to protect young users. Yet the real question is whether these are meaningful steps towards genuine safety or mere public relations maneuvers. Implementing convenience features and expanding protective measures is a positive move, but the darker underbelly of social media calls for more profound, systemic reform. The platform must go beyond reactive safety nets and invest in shaping a digital culture that values respect and responsibility.

In truth, Meta’s efforts are encouraging, but the company is still playing catch-up in an environment that evolves faster than its safeguards do. To truly advance, Meta must leverage artificial intelligence, foster cross-platform collaborations, and prioritize digital civics education for teens. Without a holistic, proactive approach, these safety features risk becoming symbolic gestures rather than transformative protections. As young users navigate the complex web of online interactions, companies like Meta must take ownership of creating safer, more trustworthy digital spaces, not just for now, but for generations to come.