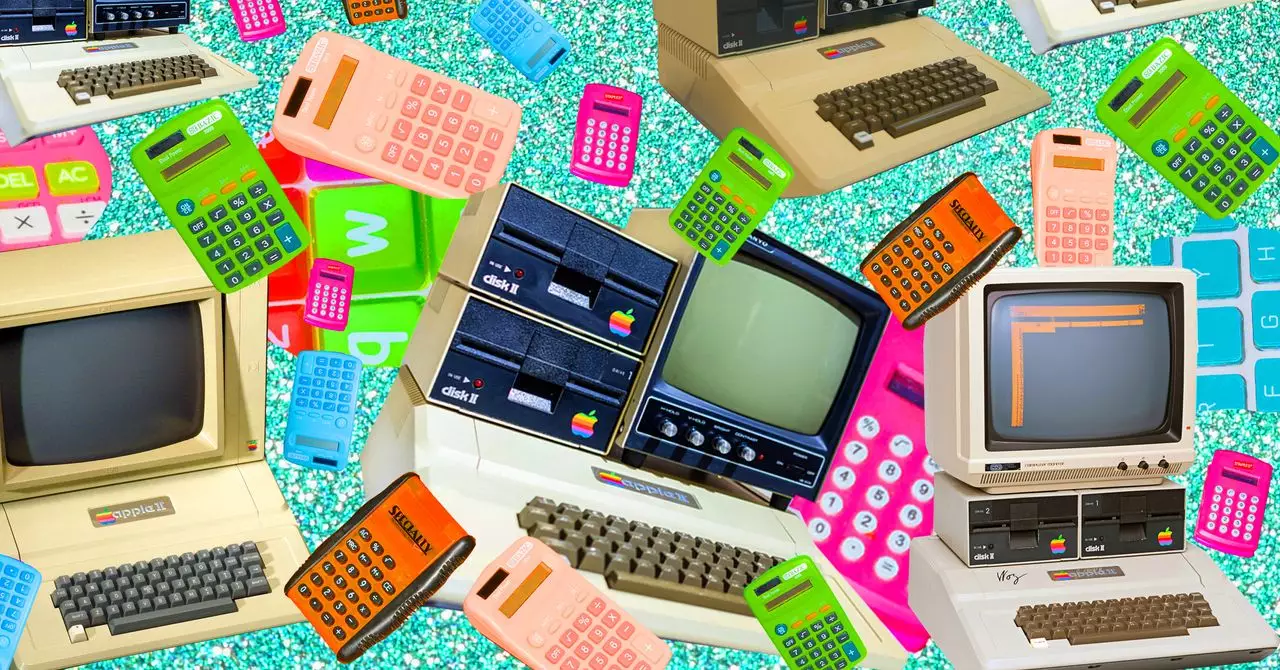

The journey of integrating technology into educational settings has often been portrayed as an inevitable march toward progress. Starting from Apple’s pivotal role in the early 1980s, with generous computer donations and supportive legislation, the narrative has consistently promoted the idea that more digital tools equate to better learning outcomes. The rapid change in student-to-device ratios, from roughly 92 students per computer in 1984 to one device for each student by the early 21st century, highlights an almost relentless push for digitization. While these figures seem to signify modernization, a closer look exposes the assumption underneath them: that technology inherently improves education.

The reality is that the arrival of computers, whiteboards, and internet access has often outpaced thoughtful pedagogical integration. As adoption surged, questions arose about whether these investments genuinely translated into educational gains. The narrative that digital tools are universally beneficial has sometimes masked a troubling side effect: the dilution of core skills, especially when technology is treated as a panacea rather than an enhancement. It’s easy to assume that access alone equates to quality, but this overlooks the vital importance of effective teaching methods and curriculum design. Are we sacrificing depth for breadth? Are students truly engaging with material or merely consuming bits of information?

The Clash of Perspectives: Innovation Versus Skepticism

Despite the widespread enthusiasm, there have always been dissenting voices questioning the overreliance on technology. Critics such as A. Daniel Peck argue that the obsession with computers and digital devices could be a distraction from foundational skills like literacy, numeracy, and critical thinking. Their concerns are rooted in the observation that resources are sometimes diverted away from essential teaching practices toward acquiring shiny gadgets. Peck’s call to “stop the bandwagon” reveals a significant tension: the desire to innovate versus the apprehension that next-generation tech may not deliver on its promise.

This skepticism is not unfounded. When the focus shifts predominantly to hardware and software installations, questions emerge about whether teachers are receiving adequate training to integrate these tools meaningfully. Moreover, pursuing device availability alone, without regard to pedagogical quality, risks turning classrooms into showpieces of technology rather than centers of active learning.

Cost Versus Educational Value: Are We Investing Smartly?

Cost plays a central role in this debate. The price of interactive whiteboards, which ranged from $700 to over $4,500 per unit in 2009, exemplifies the high stakes involved. The enthusiasm for such technology often leads to expenditures that do not align with proven educational outcomes. While many schools have adopted whiteboards and internet access, the question remains: are these tools used effectively, or are they simply status symbols?

Furthermore, the explosion of internet access—bolstered by programs like the FCC’s E-Rate—has expanded digital reach but also intensified scrutiny. Critics argue that excessive internet use can distract rather than educate. The promise of limitless information comes with the peril of superficial learning, cyberbullying, and exposure to inappropriate content. The cost-benefit analysis isn’t always straightforward; funding might be better allocated to teacher training, curriculum refinement, or smaller class sizes rather than technology alone.

The Irony of Technological Hype: Progress or Pitfall?

The narrative that the advent of the web, multimedia projectors, and other digital aids inherently improves education reflects a human tendency to idolize novelty. The hype surrounding the internet, fueled by the launch of Mosaic and subsequent browser innovations, initially promised a revolution that would democratize knowledge. Yet, by 2001, despite widespread access, educational disparities persisted, and the deep, critical engagement so vital to learning was often absent.

This disconnect underscores a vital insight: technology is not a substitute for effective teaching. It can be a powerful tool when wielded thoughtfully, but when adopted blindly out of enthusiasm or policy mandates, it risks becoming an expensive distraction. As educators and policymakers grapple with these realities, there’s a growing need to balance technology integration with foundational pedagogical principles. Without this balance, the promise of digital education may remain unrealized, or worse, become an additional source of inequity and superficiality.

In the end, the question is not whether technology belongs in classrooms but how it should serve the art of teaching. As the educational landscape continues to evolve, the critical eye must focus on whether these tools are actually enhancing understanding, fostering critical thinking, and preparing students for a complex world. Only through such scrutiny can we avoid the pitfalls of technological overreach and ensure that progress truly benefits learners.