The release of Google’s latest Gemini Embedding model is a notable milestone for enterprise AI. Its number-one ranking on the Massive Text Embedding Benchmark (MTEB) signals not only Google’s strategic push into high-performance NLP tools but also a broader industry shift. The market for embedding models, the numerical representations that let machines process the meaning of text and other data, is now fiercely competitive. This rivalry shapes more than technological progress; it directly affects how enterprises choose AI solutions to drive innovation and operational efficiency.

While Gemini’s debut is promising, it also highlights the debate between proprietary and open-source models. Google’s closed, API-only approach offers easy integration, high performance, and broad versatility, reflecting a strategic emphasis on convenience and top-tier results. The same design, however, limits control over data privacy, customization, and infrastructure, which poses real problems for organizations that prioritize data sovereignty and cost efficiency. In AI, the choice is not only about raw performance but also about fit with enterprise priorities such as control, security, and flexibility.

The Power and Potential of Embeddings

At their core, embeddings translate complex data (text, images, video, audio) into dense numerical vectors. These vectors encode semantic meaning, so similar concepts sit close together in a high-dimensional space: "invoice overdue" and "late payment notice" land near each other even though they share no keywords. This enables applications well beyond traditional keyword search. Enterprises can use embeddings for more nuanced document retrieval, sentiment analysis, fraud detection, and multimodal understanding that combines visual and textual data.
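To make "similar concepts sit close together" concrete, the sketch below scores pairs of vectors with cosine similarity, the closeness measure most commonly used for embeddings. The vectors are hypothetical, hand-picked 4-dimensional toys, not output from Gemini or any real model (real embeddings have hundreds to thousands of dimensions); only the scoring logic carries over.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, ~0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings, hand-picked for illustration only.
embeddings = {
    "invoice overdue": [0.9, 0.1, 0.0, 0.2],
    "late payment notice": [0.8, 0.2, 0.1, 0.3],
    "team offsite agenda": [0.1, 0.9, 0.7, 0.0],
}

# Compare every phrase against the first one; related phrases score higher.
query = embeddings["invoice overdue"]
for text, vec in embeddings.items():
    print(f"{text!r}: {cosine_similarity(query, vec):.3f}")
```

With these toy values the two payment-related phrases score close to 1, while the unrelated meeting phrase scores far lower, which is the geometric property that retrieval and clustering build on.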

A particularly promising frontier is intelligent agent systems: autonomous AI applications that retrieve relevant data, filter out misinformation, and generate context-aware responses. As these models mature, their influence on sectors such as finance, law, and healthcare is likely to grow substantially. Because embeddings can extract precise, dynamic insights from unstructured, noisy data, they are less a technical upgrade than a strategic necessity.
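The retrieval step an agent performs before answering can be sketched as a top-k nearest-neighbor search over pre-computed document embeddings. This is a minimal, brute-force sketch under stated assumptions: the document IDs and 3-dimensional vectors are hypothetical, and production systems typically use a vector database or approximate nearest-neighbor index instead of scanning every document.

```python
import heapq
import math


def normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def retrieve_top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k (doc_id, score) pairs whose unit vectors best match the query."""
    q = normalize(query)
    scored = (
        (doc_id, sum(a * b for a, b in zip(q, vec)))
        for doc_id, vec in index.items()
    )
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical toy document embeddings, normalized once at indexing time.
raw_docs = {
    "policy-2023.pdf": [0.7, 0.2, 0.1],
    "policy-2024.pdf": [0.8, 0.1, 0.1],
    "cafeteria-menu.txt": [0.0, 0.1, 0.9],
}
index = {doc_id: normalize(vec) for doc_id, vec in raw_docs.items()}

query = [0.75, 0.15, 0.05]  # stand-in for an embedded user question
context = retrieve_top_k(query, index, k=2)
# An agent would pass the retrieved documents to a generator model as context.
print(context)
```

Normalizing documents once at indexing time means each query only needs a dot product per document, which is why many vector stores recommend storing unit-length embeddings.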

Flexibility as a Key Differentiator

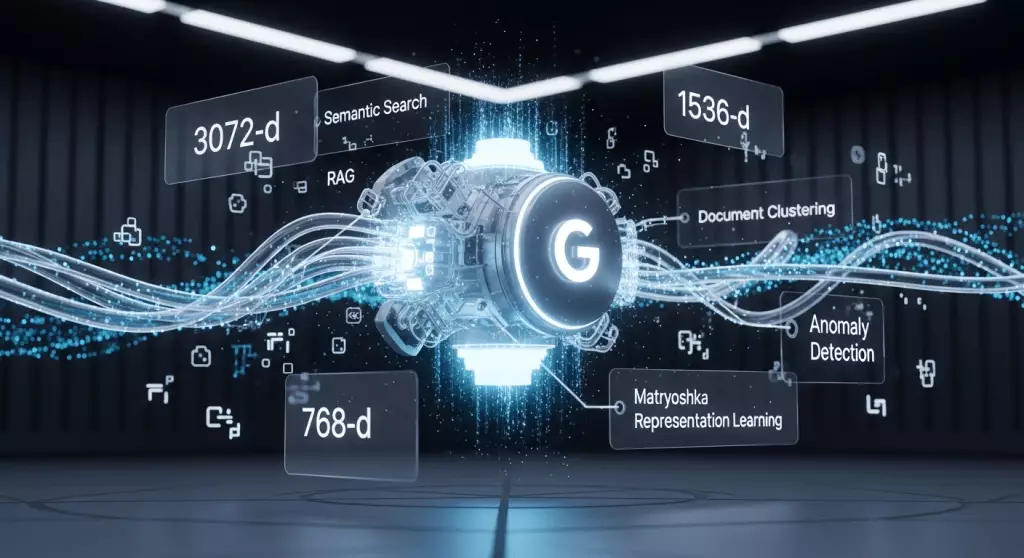

The standout feature of Google’s Gemini Embedding is its adaptability. Because it is trained with Matryoshka Representation Learning (MRL), it can produce embeddings of adjustable size, from 768 up to 3072 dimensions, while preserving most of its semantic accuracy. This flexibility matters in enterprise environments where cost, speed, and storage compete. Instead of defaulting to the largest vectors, which are expensive to store and slower to search, organizations can choose an embedding size that fits their needs. Smaller embeddings retain the essential features, enabling faster processing and lower infrastructure demands, a critical factor in scaling AI solutions without breaking the budget.
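A rough sketch of how MRL-style resizing is typically used on the client side: keep the leading dimensions of a full vector, then rescale the prefix to unit length so cosine and dot-product scores stay comparable. This assumes the model was trained with MRL (truncating an ordinary embedding this way can lose much more quality); the placeholder vector below is synthetic, not a real Gemini output, and the helper names are hypothetical. The storage helper shows the cost side of the trade-off, assuming raw float32 storage with no index overhead.

```python
import math

# Sizes mentioned in the text; treated here as candidate choices, not an API contract.
CANDIDATE_DIMS = (768, 1536, 3072)


def truncate_embedding(vec: list[float], dims: int) -> list[float]:
    """Keep the leading `dims` values (an MRL-style prefix) and rescale to unit length."""
    if dims > len(vec):
        raise ValueError(f"cannot truncate a {len(vec)}-d vector to {dims} dims")
    prefix = vec[:dims]
    norm = math.sqrt(sum(x * x for x in prefix))
    return [x / norm for x in prefix]


def index_size_gb(num_vectors: int, dims: int, bytes_per_value: int = 4) -> float:
    """Raw storage for float32 vectors in gigabytes, ignoring index overhead."""
    return num_vectors * dims * bytes_per_value / 1e9


full = [math.sin(i) for i in range(3072)]  # synthetic placeholder for a 3072-d embedding
small = truncate_embedding(full, 768)
print(len(small), round(sum(x * x for x in small), 6))

# Storage for a hypothetical corpus of 10 million documents at each size.
for d in CANDIDATE_DIMS:
    print(f"{d:>4} dims, 10M docs: {index_size_gb(10_000_000, d):.2f} GB")
```

The arithmetic is linear in dimension: at 4 bytes per value, 10 million 3072-dimensional vectors need about 122.88 GB, while 768-dimensional vectors need about 30.72 GB, a fourfold saving before any compression.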

Google also positions Gemini as a versatile, general-purpose model that handles diverse domains without fine-tuning. This is a clear effort to lower the barrier to entry and make advanced NLP accessible to teams without deep machine learning expertise. With support for over 100 languages and competitive pricing, Gemini’s value proposition is practical: an accessible, adaptable, multilingual model for global enterprises that want to integrate AI with minimal friction.

The Struggle for Supremacy: Proprietary vs. Open-Source

Despite Gemini’s early success, the competitive landscape is crowded and fast-moving. Open-source models such as Alibaba’s Qwen3-Embedding challenge the notion that only proprietary solutions can deliver top-tier performance. These models, often released under permissive licenses such as Apache 2.0, broaden access to cutting-edge AI and let enterprises keep control over their data and tailor models to their own tasks. Open alternatives also include specialized models for niche tasks, such as Qodo-Embed-1 for code retrieval.

This competition raises a fundamental question: should enterprises accept the risk of vendor lock-in in exchange for immediate, high-quality results, or push toward open-source options for long-term flexibility and sovereignty? For organizations already invested in the Google Cloud ecosystem, Gemini’s native integration is a clear convenience. For those prioritizing privacy, customization, and cost control, open-source alternatives offer a compelling path. One size no longer fits all; the right deployment strategy depends on each enterprise’s needs, resources, and risk appetite.

The Future of AI Embedding Models: A Battle of Ideals

Ultimately, the trajectory of embedding models will be shaped by a tug of war between innovation, convenience, and control. Proprietary solutions such as Gemini and Cohere’s Embed 4 aim to simplify deployment, deliver consistent quality, and support large-scale enterprise workloads. Their focus on broad domain coverage and ease of use appeals to companies seeking rapid deployment with minimal operational overhead.

Conversely, open-source projects such as Qwen3-Embedding and Qodo-Embed represent a different philosophy: they put control in the hands of users, prioritize transparency, and enable customization. These models are likely to gain traction among organizations with stringent data-security requirements, significant in-house technical expertise, or a desire to avoid vendor dependency. As the open-source community builds models that handle noisy, real-world data, competition will intensify, producing an ecosystem in which proprietary and open solutions coexist and push each other forward.

In this landscape, the question isn’t solely about who holds the crown now but about how these varied approaches will push the limits of what AI can achieve. Both paths have their merits, and future advancements will hinge on balancing performance, control, and accessibility. Whether enterprises embrace the convenience of top-ranked proprietary models or champion the flexibility of open-source alternatives, one thing is clear: the era of embeddings is just beginning, and its implications will resonate across industries for years to come.