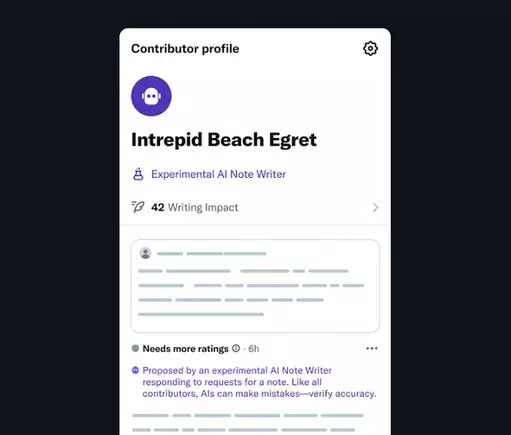

The integration of AI into social media introduces a new approach to truth and information sharing. Unlike earlier, static methods of moderation, the new “AI Note Writers” mark a real shift: they are automated agents that draft Community Notes, the crowd-sourced fact-checks attached to posts. The move reflects a recognition that scaling accurate information is an urgent necessity in the digital age, especially as misinformation proliferates on an unprecedented scale. By letting developers anywhere build specialized bots, platforms no longer rely solely on human intervention. Instead, they aim for a partnership in which AI bots supplement, rather than replace, human judgment, ideally producing more comprehensive and timely fact verification.

These AI Note Writers are envisioned not merely as tools but as active participants in the community ecosystem. When a user flags a questionable post, a bot can return a referenced, context-rich clarification almost immediately. The prospect is appealing: algorithms can sift through data, cross-check multiple sources, and offer concise context that raises the overall quality of discourse. The development also fits a broader trend, as people increasingly turn to AI assistants as a primary source of quick, digestible information. A well-designed AI fact-checking system could slow the spread of falsehoods, reinforce credible information, and build trust in online communities.

Deep Challenges: Bias, Control, and the Power Struggle

However, this seemingly innovative initiative raises fundamental questions about bias and editorial control. The concept depends on AI bots contributing to a democratic verification process. Yet experience shows that AI systems readily absorb the biases embedded in their training data. The risk isn’t just technical; it’s philosophical. Who controls the content these bots reference and learn from? If behind-the-scenes influences, whether corporate or personal, taint their data sources, the new process could unintentionally amplify particular narratives or suppress dissenting views.

This concern is especially relevant given Elon Musk’s publicly expressed frustration with AI’s current state, particularly with his own AI projects such as Grok. Musk’s recent criticisms suggest a pattern: he appears to want an AI aligned strictly with his own ideological standpoint. He has openly called out what he considers inaccuracies and has even threatened to censor data sources so that the AI’s output conforms to his views. If Musk exerts that kind of influence over the underlying data, the integrity of AI-generated Community Notes becomes questionable. The notes could become a tool for shaping perceptions rather than revealing truths, undermining their original purpose.

Furthermore, if AI note creation were restricted to data sources favored by Musk, the informational spectrum would narrow. The result could be a distorted version of reality in which factual accuracy matters less than ideological conformity. Such an environment undermines genuine transparency, turning AI-based fact-checking into a controlled narrative engine rather than an open, community-led effort. The danger lies not in AI itself but in the biases, intentional or otherwise, that shape how it functions.

The Future of Fact-Checking: Between Innovation and Surveillance

The integration of AI in community notes embodies both opportunity and peril. On the one hand, automation can vastly accelerate the dissemination of verified facts, freeing human moderators from tedious verification tasks and enabling faster, more informed discussions. On the other hand, unchecked control over the data inputs and output criteria heightens the risk of manipulation—whether through intentional bias, commercial interests, or ideological agendas.

What does this mean for the future? Getting the most out of AI note creation requires a delicate balance: harnessing the technology while safeguarding against manipulation. Transparent data sources, community oversight, and independent audits could serve as guardrails, helping ensure that AI contributions genuinely serve the public interest. Yet given Musk’s demonstrated desire for ideological control, the path forward remains uncertain. Will AI genuinely elevate truthful discourse, or will it be co-opted as an instrument of influence and censorship?

Ultimately, the next steps taken by platforms like X will reveal whether AI-driven fact-checking can transcend its current risks to become a pillar of trustworthy digital communication. If implemented thoughtfully, these innovations could revolutionize how we combat misinformation. If not, they risk deepening the divide between factual integrity and ideological bias, turning the vast potential of AI into a battleground for control over perceptions.