In recent years, artificial intelligence (AI) has made remarkable strides, yet the field of robotics remains mired in limitations. Much of today's robotic technology, especially in industrial settings like factories and warehouses, consists of machines performing rigidly defined tasks in highly controlled environments. These robots execute their programmed routines with precision, but they cannot adapt to unexpected changes or challenges in their surroundings. This limitation illustrates a fundamental challenge in robotics: robots' inability to perceive and interact with their environment in a nuanced way.

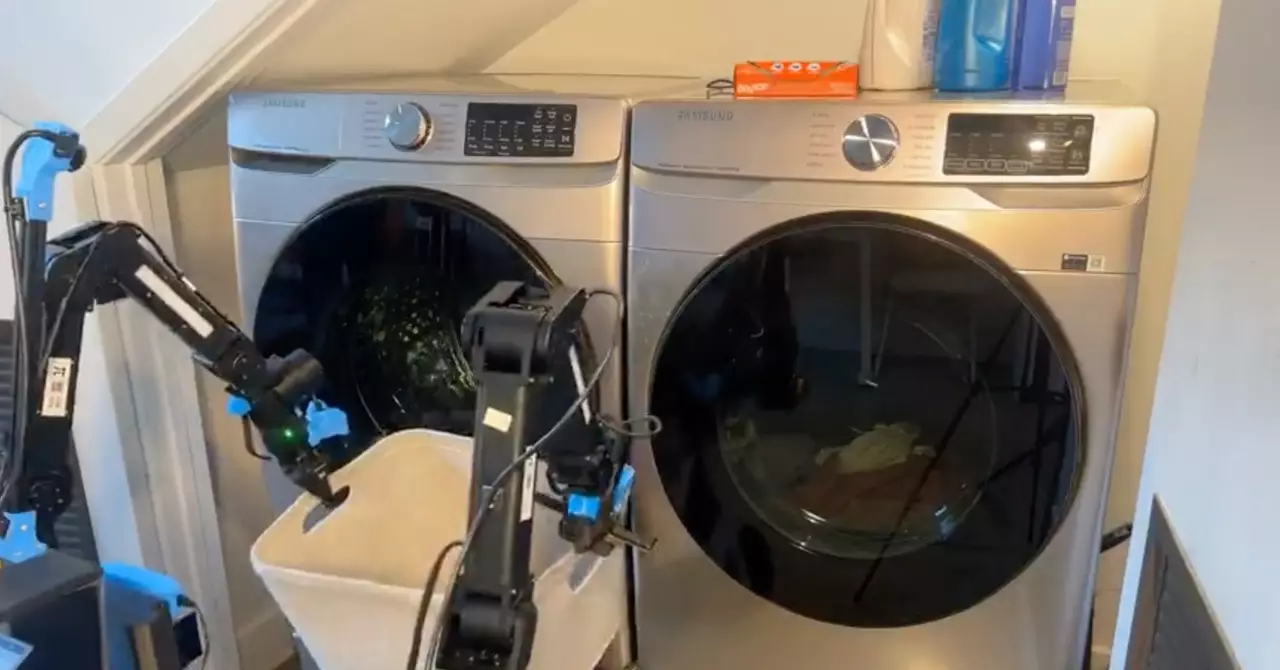

While a handful of industrial robots can see and manipulate objects, their dexterity is generally quite restricted. They are designed for specific tasks and possess little in the way of general physical intelligence, which is crucial for handling a diverse array of responsibilities. As a result, the potential for robots in more variable and chaotic environments, such as homes, remains largely untapped.

The excitement surrounding advances in AI has spilled over into optimistic expectations for breakthroughs in robotics. High-profile initiatives, such as Tesla’s development of the humanoid robot Optimus, signal a potential leap forward. Elon Musk has stated his ambition for this robot to be available at a price between $20,000 and $25,000 by 2040, asserting that it will be capable of performing a vast array of tasks. Such claims warrant a measure of skepticism, however; the complexities involved in achieving generalizability in robotics are profound.

Many past efforts in robot learning were limited to training single machines on individual tasks, primarily because learned skills were thought not to transfer across different scenarios. Recent academic work suggests a shift in this paradigm, demonstrating that with significant scale and nuanced understanding, robots may learn transferable skills.

A noteworthy advancement in this arena is Google’s 2023 initiative, Open X-Embodiment, which explored the sharing of learned behaviors among 22 distinct robots across 21 research laboratories. This project underscores the potential for collaborative learning in robotics, yet it also highlights a significant hurdle: the scarcity of robust data for training robots. Unlike the vast datasets available for language models, robotic datasets are still emerging, requiring companies to devise original methodologies to generate data conducive to learning.

For instance, the company Physical Intelligence regards this challenge as a pathway to innovation. By integrating vision language models, which combine visual data with textual information, with diffusion modeling techniques borrowed from AI image generation, the company aims to cultivate a more versatile approach to robotic learning.

Achieving proficiency in a range of robotic tasks hinges on improving learning mechanisms and expanding access to data. While the road ahead may be fraught with challenges, there is growing optimism about a future in which robots can operate in environments that demand more than rote repetition, exhibiting a form of intelligence that parallels human adaptability. As one researcher aptly noted, “There’s still a long way to go, but we have something that you can think of as scaffolding that illustrates things to come.” Indeed, the promise of advanced robots lies in their potential to evolve into tools that integrate seamlessly into various aspects of human life, provided significant strides are made in the foundational technologies that underpin their operation.