Artificial intelligence has long been heralded as a transformative technology capable of revolutionizing content creation, automation, and even societal norms. Yet beneath the promise of innovation lies a troubling reality: many AI platforms, especially those involved in creative and visual content generation, fall short in implementing robust safeguards. The recent surge of AI tools that generate images and videos has exposed significant gaps in regulation and ethical responsibility. While some companies take steps to moderate content, others seem willing to neglect or obscure these responsibilities, creating fertile ground for misuse and harm.

This disparity is particularly evident in the realm of AI-powered video and image generators that enable users to produce NSFW content, deepfakes, and celebrity likenesses with minimal restrictions. The allure of unrestricted creative freedom is undeniable, but the consequences of such unchecked power can be dire. These tools often operate with blatant disregard for potential misuse, raising questions about their developers’ commitment to ethical standards and societal well-being. By promoting and enabling suggestive or explicit content with just a few clicks, these platforms blur the line between innovation and irresponsibility.

Gaps in Regulation and Accountability

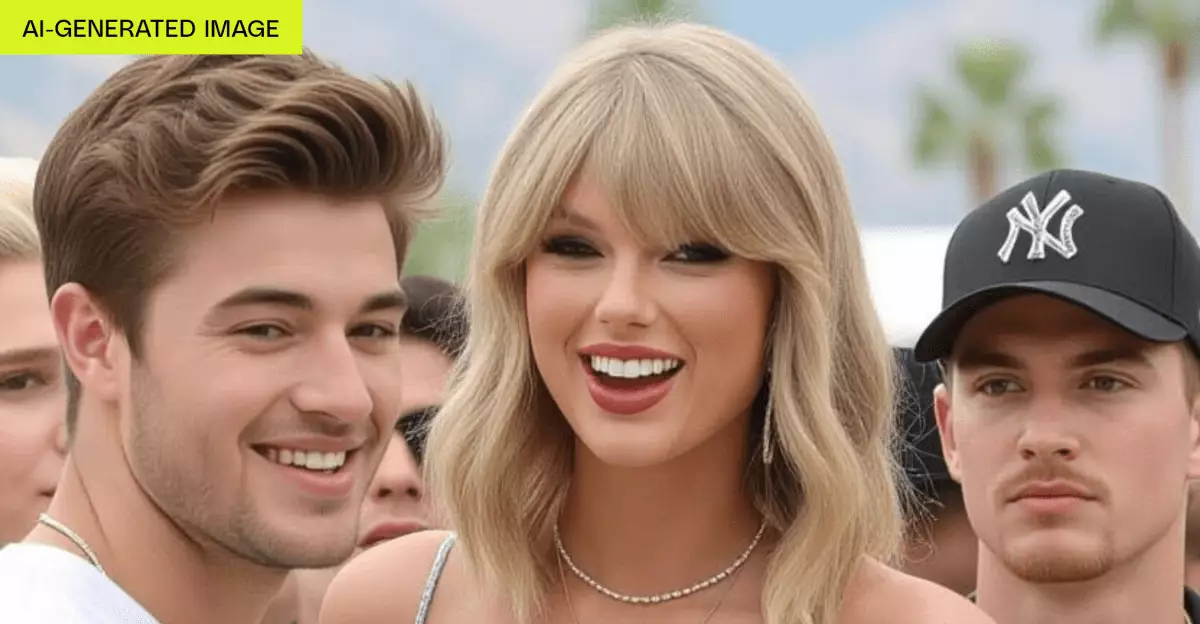

The case of Grok Imagine exemplifies the alarming neglect of safety measures in AI content generation. Despite existing laws such as the Take It Down Act, which aim to regulate deepfake and NSFW content, Grok’s safeguards appear superficial at best. When users generate content featuring celebrities like Taylor Swift, the platform seemingly does little to prevent the creation of suggestive or explicit depictions. The availability of a “spicy” preset, which often results in nudity or sexually suggestive images, underscores a troubling complacency toward potential harm.

What’s more concerning is how easy it is to bypass age verification checks. The system demands minimal effort from users and allows anyone, even underage users, to access and generate potentially harmful content. This loophole effectively undermines regulatory efforts and places vulnerable populations at risk, from minors to individuals targeted or misrepresented through deepfake technology. The lack of rigorous safeguards hints at a calculated approach that prioritizes user engagement over societal responsibility.

The Ethical Vacuum of Unregulated AI Development

Companies developing generative AI tools often pay lip service to ethical standards, yet their practices suggest otherwise. The disparity between stated policies, such as bans on pornographic depictions, and actual platform functionality is stark. When AI tools like Grok Imagine do not implement enough technical barriers to prevent misuse, the result is a dangerous ethical vacuum. It becomes all too easy for users to push boundaries, explore forbidden content, or manipulate celebrity images without repercussions.

This situation highlights a fundamental flaw: the absence of effective, enforceable safeguards that protect individuals and society at large. AI developers appear to be caught in a cycle of rapid deployment—prioritizing feature release and user engagement over safety assurance. As a consequence, these tools contribute to a normalization of content that can perpetuate harmful stereotypes, exploit individuals, or distort reality with impunity.

The Need for Accountability in the Age of AI

The current landscape demands not just technological safeguards but also a moral reconsideration of the role AI developers play. As AI-generated content becomes more realistic and accessible, the ethical stakes intensify. It’s incumbent upon developers to embed safety nets, such as strict content moderation, verified age checks, and penalties for violations, into their platforms from the outset. Rushing to market with minimal regulation is not only reckless; it also erodes public trust in the very tools meant to enhance human creativity.

In an era where AI’s potential for good exists alongside risks of exploitation and harm, we must hold developers accountable. This involves not only adhering to legal standards but also fostering a culture of responsibility. AI creators should recognize that they are custodians of societal values, and their innovations must uplift rather than undermine societal norms and individual rights. Neglecting this duty invites chaos, harm, and a future in which the line between reality and fabrication becomes irreparably blurred.