In a world increasingly dominated by artificial intelligence (AI), the line between convenience and privacy is becoming more blurred than ever. Recently, Meta has rolled out new functionality that integrates its AI capabilities into private messaging on platforms like Facebook, Instagram, Messenger, and WhatsApp. While this advancement may offer intriguing possibilities for users, it raises pressing concerns about data privacy. This article dissects the implications of this integration, the measures users can take to protect their information, and the broader context of privacy in the digital age.

Meta’s latest feature lets users summon AI responses within their ongoing chats, and it informs them that they can now engage with its AI simply by mentioning @MetaAI. The appeal lies in the seamless integration of AI assistance into everyday communication. However, this convenience comes with an important caveat: any information shared within these conversations could potentially be used for AI training. As Meta warns, users should not share sensitive information such as passwords or financial details in chats if they are wary of it being processed by AI.

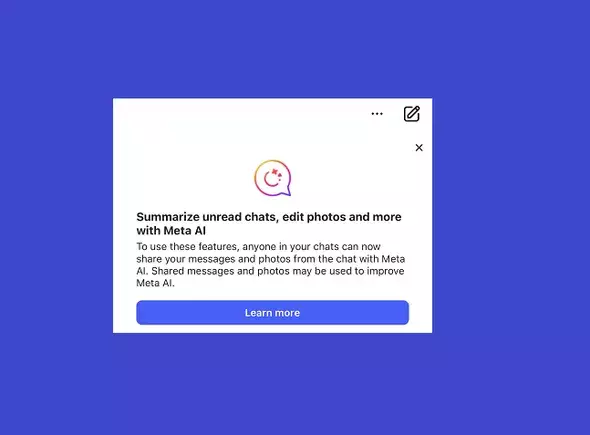

It’s essential to recognize that the arguments for this AI integration often focus on enhanced user experience. Yet the potential for data misuse looms large. Users are often encouraged to embrace technology without being fully aware of the risks. Meta’s answer to this dilemma appears to be transparency, in the form of pop-ups that advise users to exercise caution when sharing sensitive information. Nonetheless, a warning alone does not negate the potential ramifications of integrating AI into private chat spaces.

One of the most contentious aspects of this new feature is the issue of user consent. Users often scroll through lengthy terms and conditions without fully absorbing their content. Many might be surprised to learn that, by using Meta’s suite of applications, they have already consented to their data being used in the manner described. The implications are significant: users cannot opt out simply by stating their disapproval in a post or message. The system is thus designed to shift responsibility onto the user, an arrangement that feels both disingenuous and burdensome.

This raises a broader question: Are tech giants like Meta effectively taking advantage of user naivety? In a landscape where digital communication has become indispensable, users are often left feeling cornered, forced to choose between convenience and security. The reality is that avoiding Meta AI in chats may be the only certain way to retain privacy, which in effect asks users to give up potential enhancements to their digital experiences.

The introduction of AI in private messaging marks a significant pivot in how data privacy is approached. In emphasizing the transformative potential of its AI features, Meta disregards the unease that many users feel about the erosion of their privacy. The risk that an AI system inadvertently reproduces a user’s personal information from chat logs, while probably small, is real.

Moreover, group chats magnify these privacy concerns. If one user opts to use Meta AI, they could inadvertently expose sensitive information shared by others, creating a ripple effect that broadens the potential for data misuse. This interplay illustrates a critical flaw within the system and underscores the need for a robust framework to safeguard user data in a digital world.

To navigate this evolving landscape, users must adopt a proactive stance regarding their digital communications. There are several actionable measures that individuals can take:

1. **Limit Sharing Sensitive Information**: Users should always be mindful of the type of information they share in any chat, especially when AI tools are involved.

2. **Separate AI Interactions**: Engaging with Meta AI in standalone chats rather than mixing it with personal conversations could mitigate risks.

3. **Educate and Advocate**: Users should take the time to educate themselves on the terms of service and advocate for stricter privacy policies within digital platforms.

While Meta’s new AI capabilities may enrich user experience, it is crucial that caution be exercised when engaging with these technologies. Users should remain vigilant about their privacy and take steps to safeguard their personal information as they navigate the duality of convenience and confidentiality in an increasingly AI-driven world. Balancing innovation with ethical responsibility will be essential in determining the future of user trust in digital communication platforms.