In the rapidly evolving landscape of artificial intelligence, size has often been equated with power. The latest large language models, with hundreds of billions of parameters, represent a major stride in the ability of machines to understand and generate human language. Major players like OpenAI, Google, and Meta have impressed us with these enormous models, which show unprecedented accuracy and nuance in handling language. Yet this growth comes with a caveat: the financial and environmental costs of training and operating such colossal systems. As reliance on these models grows, so does the urgency of finding sustainable approaches that prioritize efficiency without sacrificing performance.

Put simply, while large language models (LLMs) push the boundaries of AI, the rise of small language models (SLMs) offers a compelling alternative. These compact models use only a fraction of the parameters found in their larger counterparts, and their growing prominence prompts a reevaluation of what constitutes power in AI.

Small Language Models: A Pragmatic Approach

The emergence of SLMs represents a shift in how researchers approach machine learning tasks. Models with roughly ten billion parameters or fewer, many of them considerably smaller, are demonstrating impressive capabilities in targeted applications such as healthcare, customer service, and data retrieval. A lower parameter count makes training cheaper and also allows deployment on devices as ubiquitous as smartphones, greatly widening the potential user base and range of applications.
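To make the deployment argument concrete, here is a back-of-the-envelope sketch of how much memory a model's weights alone occupy. It assumes memory is simply parameter count times bytes per parameter and ignores activations, the attention cache, and runtime overhead, so real requirements are higher. The model sizes and precisions below are illustrative choices, not figures for any particular model.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate storage for model weights only, in gigabytes (1 GB = 1e9 bytes).

    Assumption: ignores activations, caches, and framework overhead.
    """
    return num_params * bits_per_param / 8 / 1e9


# Illustrative sizes: a hypothetical 400B-parameter model vs. two small models.
for name, params in [("400B", 400e9), ("8B", 8e9), ("3B", 3e9)]:
    for bits in (16, 4):  # 16-bit floats vs. 4-bit quantized weights
        print(f"{name} params @ {bits}-bit: {weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions an 8-billion-parameter model needs about 16 GB at 16-bit precision and about 4 GB once quantized to 4 bits, while the 400-billion-parameter model still needs about 200 GB even at 4 bits. That gap is why only the small end of the range is plausible for on-device use.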

Zico Kolter, a computer scientist at Carnegie Mellon University, articulates a critical insight: for numerous specific tasks, models containing eight billion parameters can yield commendable results. This assertion both challenges the notion that “bigger is better” in machine learning and highlights the untapped promise of SLMs.

By shifting the focus from sheer size to task-oriented efficiency, researchers can exploit the benefits of high-quality data. Large models can clean up and curate datasets, passing their knowledge on to smaller models through a process known as knowledge distillation. In this setup, the larger model acts as a teacher: the smaller model trains on the cleaner, more relevant data the teacher produces and inherits much of its accuracy.
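Distillation is described here in terms of a large model curating data for a small one. A closely related and widely used formulation, introduced by Hinton, Vinyals and Dean (2015), trains the small "student" model to match the large "teacher" model's softened output probabilities. The sketch below computes that loss for a single example in plain Python; the logits, the temperature `T`, and the mixing weight `alpha` are hypothetical values chosen for illustration.

```python
import math


def softmax(logits, T=1.0):
    """Softmax with temperature T; a higher T gives a softer distribution."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(student_logits, teacher_logits, true_label, T=2.0, alpha=0.5):
    """Blend of (a) matching the teacher's softened outputs and (b) ordinary
    cross-entropy on the true label. The T**2 factor keeps the soft-target
    term on a comparable scale as T changes."""
    soft_teacher = softmax(teacher_logits, T)
    soft_student = softmax(student_logits, T)
    soft_loss = kl_divergence(soft_teacher, soft_student) * T ** 2
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


# Hypothetical logits for a 3-class example whose true label is class 0.
teacher = [4.0, 1.5, 0.5]
student = [2.0, 1.8, 0.9]
print(distillation_loss(student, teacher, true_label=0))
```

A student whose logits match the teacher's exactly incurs zero soft loss, so with `alpha=1.0` the loss is 0. In practice the same loss is averaged over a batch and minimized by gradient descent in a deep learning framework.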

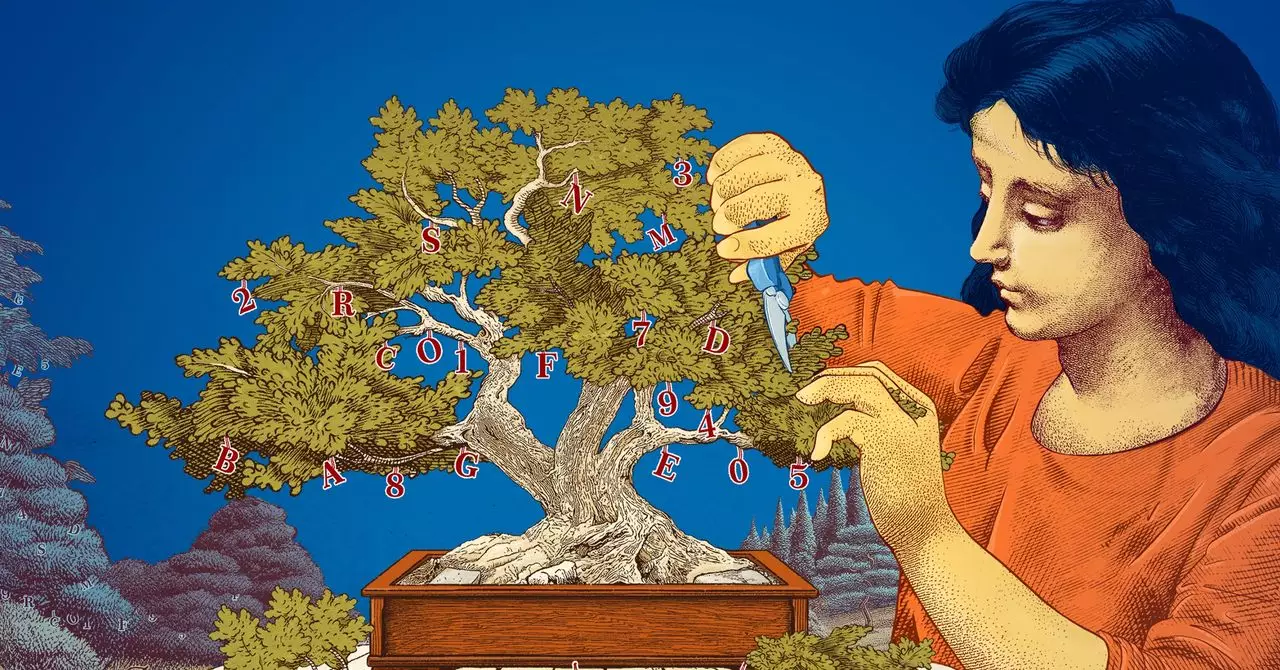

Efficiency Through Pruning

Another intriguing avenue for building SLMs is pruning. Applied to neural networks, pruning strips away connections that contribute little to a model's output, reducing its size and computational cost. The idea is loosely inspired by the human brain, where synaptic connections are strengthened or pared back with experience. Pruning serves a dual purpose of optimization and specialization: by discarding superfluous links, researchers can tailor small models to excel in specific contexts, maintaining high performance without incurring the costs associated with larger models.
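One of the simplest pruning criteria is weight magnitude: connections whose weights are closest to zero are assumed to contribute least and are removed. The sketch below applies unstructured magnitude pruning to a small weight matrix stored as nested lists. The matrix and the 50% sparsity target are made-up values, and real pruning pipelines usually fine-tune the model afterward to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest absolute value.

    `weights` is a 2-D matrix (list of rows). Returns the pruned matrix and a
    binary mask (1 = kept, 0 = pruned). Ties are broken by position so that
    exactly int(n * sparsity) weights are removed.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    flat = [(abs(w), i, j) for i, row in enumerate(weights) for j, w in enumerate(row)]
    num_prune = int(len(flat) * sparsity)
    # Positions of the num_prune smallest-magnitude weights.
    to_prune = {(i, j) for _, i, j in sorted(flat)[:num_prune]}
    mask = [[0 if (i, j) in to_prune else 1 for j in range(len(row))]
            for i, row in enumerate(weights)]
    pruned = [[w if m else 0.0 for w, m in zip(row, mrow)]
              for row, mrow in zip(weights, mask)]
    return pruned, mask


W = [[0.8, -0.05, 0.3],
     [-0.02, 0.6, -0.4]]
pruned, mask = magnitude_prune(W, sparsity=0.5)
print(mask)    # [[1, 0, 0], [0, 1, 1]]
print(pruned)  # [[0.8, 0.0, 0.0], [0.0, 0.6, -0.4]]
```

Zeroed weights only save memory and compute if the storage format or hardware exploits sparsity. Structured pruning, which removes whole neurons, attention heads, or layers, instead yields a genuinely smaller dense model.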

Historically, the method traces back to pioneering research by Yann LeCun, whose 1989 work on what he called “optimal brain damage” argued that a large share of a trained network's parameters could be eliminated without hurting performance. The counterintuitive nature of this approach, sacrificing quantity for quality, offers a refreshing perspective on model design and echoes the way the brain prunes its own connections.
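The intuition behind LeCun's argument can be sketched as a short derivation, following the 1989 paper "Optimal Brain Damage" by LeCun, Denker and Solla. Expand the training loss $E$ to second order around a trained model, where $u_k$ is the $k$-th parameter, $g_k$ the gradient, and $h_{kl}$ the Hessian entries:

$$
\delta E \approx \sum_k g_k\,\delta u_k + \tfrac{1}{2}\sum_k h_{kk}\,\delta u_k^2 + \tfrac{1}{2}\sum_{k \neq l} h_{kl}\,\delta u_k\,\delta u_l
$$

At a trained minimum $g_k \approx 0$, and the paper further assumes the off-diagonal terms $h_{kl}$ can be neglected. Deleting parameter $k$ means setting $\delta u_k = -u_k$, which changes the loss by approximately its saliency

$$
s_k = \tfrac{1}{2}\, h_{kk}\, u_k^2 .
$$

Parameters with the smallest saliency are the cheapest to remove, so a network can shed many of them while the loss barely moves. If every $h_{kk}$ is treated as equal, ranking by $s_k$ reduces to ranking by $|u_k|$, which is plain magnitude pruning.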

Testing Grounds for Innovation

Beyond their operational advantages, SLMs give researchers room to experiment with greater freedom and lower risk. Because they are far cheaper to train and run, small models serve as an accessible testing ground for new ideas, fostering innovation in AI methods. As Leshem Choshen of the MIT-IBM Watson AI Lab points out, these compact systems change how researchers can plan and execute experiments, lowering the stakes and broadening the scope of exploration.
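Why fewer parameters means lower stakes can be made concrete with a common rule of thumb from the scaling-law literature: training a dense transformer costs roughly $6ND$ floating-point operations, where $N$ is the parameter count and $D$ the number of training tokens. The sketch below uses that approximation to compare an 8-billion-parameter model with a hypothetical 400-billion-parameter one trained on the same made-up token budget; the numbers illustrate the ratio, not the cost of any real model.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Approximate training compute for a dense transformer: about 6 FLOPs per
    parameter per token (forward plus backward pass). A rule of thumb only."""
    return 6 * num_params * num_tokens


TOKENS = 1e12  # hypothetical training budget: one trillion tokens

small = training_flops(8e9, TOKENS)
large = training_flops(400e9, TOKENS)
print(f"8B model:   {small:.1e} FLOPs")
print(f"400B model: {large:.1e} FLOPs")
print(f"ratio:      {large / small:.0f}x")
```

Under this approximation compute grows linearly with $N$ for a fixed token budget, so fifty training runs of the small model cost about as much as a single run of the large one.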

This experimental ethos lets researchers rethink their strategies and move toward a more sustainable, responsible approach in a field often criticized for its resource-intensive nature. Opening diverse avenues for discovery without the heavy investment that larger models demand may pave the way for a more inclusive and dynamic research landscape.

The Future of AI: Balancing Power and Responsibility

Looking ahead, small language models are likely to complement their larger siblings rather than replace them. LLMs will probably remain the better choice for complex applications like drug discovery or large-scale content generation, but the case for SLMs is increasingly compelling. The efficiency, accessibility, and transparency they offer could democratize AI, opening pathways for smaller organizations and individual developers to work with cutting-edge technology.

The discourse surrounding AI is shifting. The goal is not only to achieve high performance through sheer power but also to build models that are sustainable and equitable. Balancing ambition with responsibility will be key for researchers and developers alike. Small language models offer a glimpse of what an inclusive AI future might look like: one where efficiency and purpose matter as much as size and scale.