As large language models (LLMs) improve, developers have several ways to customize them for specific tasks. Fine-tuning and in-context learning (ICL) are the two leading approaches, and the choice between them can strongly affect how an LLM performs in real-world applications. Recent research from Google DeepMind and Stanford University compares the two and offers findings that may change how developers adapt models to complex problems.

At the heart of this research is generalization: how well a model can apply learned knowledge to new, unseen situations. The findings indicate that ICL generalizes more flexibly than fine-tuning, albeit at a higher computational cost during inference. The choice of approach therefore depends on the task’s specific requirements, and the stakes are particularly high when dealing with proprietary enterprise data.

Understanding Fine-Tuning and In-Context Learning

Fine-tuning is the traditional methodology that involves taking a pre-trained LLM and refining its parameters by exposing it to a smaller, domain-specific dataset. The primary goal is to instill new knowledge or hone particular skills relevant to the desired outcomes. On the surface, fine-tuning appears to be an efficient route, but it often falls short when models encounter unfamiliar tasks outside their trained scope.

ICL, by contrast, leaves the model’s internal parameters untouched. Instead, it places relevant examples directly in the prompt, so the model can draw on them at inference time without any retraining, often with surprisingly robust results. However, the researchers found that this approach demands significant computational resources, because the examples must be processed on every call, which raises questions about efficiency and scalability.
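
To make the contrast concrete, the sketch below turns the same question-answer pairs into either fine-tuning records or a single ICL prompt. It is a minimal illustration, not the researchers' code: the `prompt`/`completion` record layout, the `Q:`/`A:` prompt template, and the nonsense words are all assumptions chosen for the example.

```python
from typing import Dict, List, Tuple

# One worked example: (question, answer).
Example = Tuple[str, str]


def to_finetune_records(examples: List[Example]) -> List[Dict[str, str]]:
    """Fine-tuning path: each pair becomes a training record.

    The "prompt"/"completion" keys are a hypothetical layout; real
    training pipelines define their own schema.
    """
    return [{"prompt": q, "completion": a} for q, a in examples]


def build_icl_prompt(examples: List[Example], query: str) -> str:
    """ICL path: the same pairs are placed in the prompt before the query.

    No weights change; the examples are re-sent with every request.
    """
    shots = [f"Q: {q}\nA: {a}" for q, a in examples]
    return "\n\n".join(shots + [f"Q: {query}\nA:"])


if __name__ == "__main__":
    pairs = [("What is a femp?", "A kind of glon.")]
    print(to_finetune_records(pairs))
    print(build_icl_prompt(pairs, "What is a glon?"))
```

The prompt grows with every example added, which is where the per-call inference cost of ICL comes from.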

The Research Framework: Rigorous Testing of Generalization

To empirically evaluate the generalization abilities of fine-tuning and ICL, the researchers designed stringent tests using controlled synthetic datasets. These datasets were ingeniously crafted to possess complex, self-consistent structures—such as fictional hierarchies or imaginary family trees—disguised under nonsensical vocabularies. This precaution ensured that the models would not rely on previously learned patterns, but instead demonstrate their ability to generalize from newly structured information.
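
A dataset of this kind can be approximated in a few lines. The sketch below is an assumption-laden illustration rather than the researchers' generator: it invents pronounceable nonsense words from a seeded random source and chains them into a simple fictional hierarchy. The word shape, the chain structure, and the "All X are Y." template are all hypothetical choices.

```python
import random
from typing import List, Tuple

CONSONANTS = "bdfgklmnprstvz"
VOWELS = "aeiou"


def nonsense_word(rng: random.Random) -> str:
    """Build a five-letter consonant-vowel-consonant-vowel-consonant word."""
    body = "".join(rng.choice(CONSONANTS) + rng.choice(VOWELS) for _ in range(2))
    return body + rng.choice(CONSONANTS)


def make_vocab(n: int, seed: int = 0) -> List[str]:
    """Return n distinct nonsense words, reproducible for a given seed.

    A real pipeline would also filter out accidental real words.
    """
    rng = random.Random(seed)
    words: List[str] = []
    seen = set()
    while len(words) < n:
        word = nonsense_word(rng)
        if word not in seen:
            seen.add(word)
            words.append(word)
    return words


def make_hierarchy(words: List[str]) -> List[Tuple[str, str]]:
    """Chain the words so each one is a subtype of the next."""
    return list(zip(words, words[1:]))


def render(pairs: List[Tuple[str, str]]) -> List[str]:
    """Turn (child, parent) pairs into training sentences."""
    return [f"All {child} are {parent}." for child, parent in pairs]


if __name__ == "__main__":
    vocab = make_vocab(4, seed=1)
    for sentence in render(make_hierarchy(vocab)):
        print(sentence)
```

Because the vocabulary is invented, a model cannot answer questions about the hierarchy from pre-training alone; it has to use the structure it was just shown.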

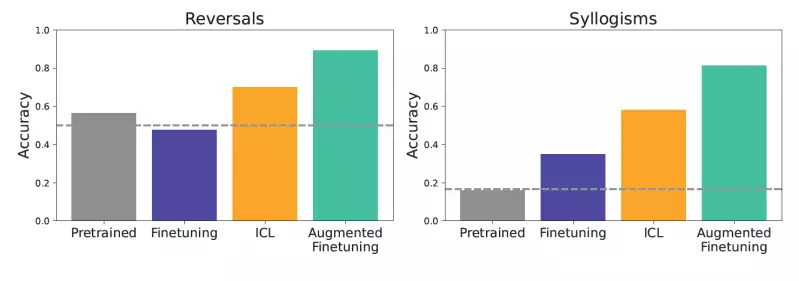

The tasks ranged from simple reversals to complex syllogisms, testing the models’ capacity to infer relationships and draw deductions. The benchmarks highlighted ICL’s advantage in flexible generalization and exposed the limitations of models that rely solely on standard fine-tuning. When the relevant information was supplied in context, ICL consistently outperformed fine-tuning, which strengthens the case for its use in practical applications.
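
The two task types named above can be sketched as train/test pairs, where the model sees only the training statements and is evaluated on a held-out statement that follows logically from them. The templates and nonsense words below are illustrative assumptions, not items from the actual benchmark.

```python
from typing import Dict, List, Union

Item = Dict[str, Union[str, List[str]]]


def reversal_item(a: str, relation: str, inverse: str, b: str) -> Item:
    """Train on 'a relation b'; test on the reversed statement 'b inverse a'."""
    return {
        "train": [f"{a} {relation} {b}."],
        "test": f"{b} {inverse} {a}.",
    }


def syllogism_item(a: str, b: str, c: str) -> Item:
    """Train on two premises; test on the conclusion that links them."""
    return {
        "train": [f"All {a} are {b}.", f"All {b} are {c}."],
        "test": f"All {a} are {c}.",
    }


if __name__ == "__main__":
    print(reversal_item("femp", "are more dangerous than",
                        "are less dangerous than", "glon"))
    print(syllogism_item("troff", "glon", "yomp"))
```

In both cases the test statement never appears in the training text, so answering it correctly requires generalizing rather than recalling.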

The Trade-offs: Computational Costs vs. Performance

Despite the promising results associated with ICL, it is essential to grapple with the trade-offs involved—especially regarding computational demands. While fine-tuning represents a one-time cost incurred during the training phase, ICL involves recurring costs every time the model is called upon. This raises the question: how do organizations balance the immediate financial implications against the longer-term benefits of improved performance and adaptability?
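
One rough way to frame that balance is a break-even count: the number of queries after which a one-time fine-tuning cost is cheaper than paying for extra context tokens on every call. The sketch below is a simplified model under stated assumptions: it ignores quality differences, treats the per-token price as flat, and every figure in the usage example is hypothetical.

```python
import math


def icl_extra_cost_per_query(context_tokens: int, price_per_token: float) -> float:
    """Added cost of sending the in-context examples with one query."""
    return context_tokens * price_per_token


def break_even_queries(one_time_cost: float, extra_cost_per_query: float) -> int:
    """Smallest number of queries at which the recurring ICL overhead
    reaches the one-time fine-tuning cost."""
    if extra_cost_per_query <= 0:
        raise ValueError("extra_cost_per_query must be positive")
    return math.ceil(one_time_cost / extra_cost_per_query)


if __name__ == "__main__":
    # Hypothetical figures, chosen only to show the arithmetic.
    per_query = icl_extra_cost_per_query(context_tokens=2000, price_per_token=0.000125)
    print(per_query)                             # extra cost of one ICL call
    print(break_even_queries(500.0, per_query))  # queries until costs match
```

Below the break-even volume the recurring ICL cost is the smaller of the two; above it, the one-time training cost is.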

As Andrew Lampinen, a research scientist at Google DeepMind, pointed out, this calculus is contingent on specific use cases. For enterprises that require agility in processing diverse inputs—such as dynamic customer queries—the investment in ICL may yield considerable dividends. Conversely, organizations with a stable and predictable query landscape might lean toward fine-tuning due to its lower operational overhead.

A New Paradigm: Augmented Fine-Tuning

Building on these findings, the researchers proposed an approach that combines the strengths of both methods: augmented fine-tuning. By using ICL to generate varied, context-rich examples, enterprise developers can build a substantially more effective training dataset and then fine-tune on it. This hybrid approach improves generalization while avoiding the recurring inference costs of live ICL deployments.

This new paradigm includes two strategies: a local approach focused on individual information pieces and a global strategy that engages the broader dataset context. Both avenues promise a more enriched learning experience for the LLM, significantly enhancing its ability to respond accurately to complex queries. The idea that ICL can serve as a powerful ally in bolstering fine-tuning efforts opens up exciting avenues for future research and development.
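
The two strategies can be sketched as a small data-augmentation pipeline. This is an interpretation of the description above, not the researchers' implementation: the prompt wording is invented, and `generate` is a hypothetical stand-in for whatever function calls the model and returns a list of generated sentences.

```python
from typing import Callable, List

# Hypothetical model interface: takes a prompt, returns generated sentences.
Generate = Callable[[str], List[str]]


def local_augment(facts: List[str], generate: Generate) -> List[str]:
    """Local strategy: prompt with one piece of information at a time."""
    out: List[str] = []
    for fact in facts:
        prompt = f"Rephrase this statement and state what directly follows from it:\n{fact}"
        out.extend(generate(prompt))
    return out


def global_augment(facts: List[str], generate: Generate) -> List[str]:
    """Global strategy: put the whole dataset in context for each prompt."""
    context = "\n".join(facts)
    out: List[str] = []
    for fact in facts:
        prompt = (
            f"Known statements:\n{context}\n\n"
            f"State inferences that connect this statement to the others:\n{fact}"
        )
        out.extend(generate(prompt))
    return out


def build_training_set(facts: List[str], generate: Generate) -> List[str]:
    """Original facts plus both kinds of augmentation, de-duplicated in order."""
    candidates = facts + local_augment(facts, generate) + global_augment(facts, generate)
    seen = set()
    result: List[str] = []
    for text in candidates:
        if text not in seen:
            seen.add(text)
            result.append(text)
    return result


if __name__ == "__main__":
    # Stub generator so the sketch runs without a model.
    def fake_generate(prompt: str) -> List[str]:
        return ["All troff are yomp."]

    print(build_training_set(["All troff are glon.", "All glon are yomp."], fake_generate))
```

The resulting list would then feed an ordinary fine-tuning run, so the ICL cost is paid once while building the dataset rather than on every production query.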

The Path Forward: Implications for Enterprises

As enterprises explore these methodologies, the implications are profound. Organizations can create more resilient models capable of handling an array of nuanced tasks through augmented fine-tuning. This could lead to higher reliability in understanding user intent and responding appropriately, ultimately fostering better user experiences across various applications.

As LLM technology advances, organizations will need to keep adapting their strategies for model customization. The combination of fine-tuning and ICL holds considerable potential, and companies that apply these insights stand to gain a competitive edge in AI-driven solutions. Understanding the strengths and costs of each method will be crucial in shaping the next generation of language model applications.